Key highlights:

- To fight AI-driven misinformation spread through deepfakes, 20 leading tech companies made a joint commitment on Friday, February 16th, 2024.

- Disinformation in elections has been a major issue since the 2016 US presidential race, when Russian entities used cheap methods to spread false information via social media.

- In 2018, deepfakes reached a milestone when a fabricated video of former US President Barack Obama appeared on YouTube.

- The rise of deepfake audio worsened the challenges of digital manipulation, highlighted by a damning audio clip of Iranian Foreign Minister Mohammad Javad Zarif in 2021.

- Tech giants admit that detection and watermarking technologies for identifying deepfakes haven’t kept pace with the rapid growth and improvement of AI-generated content.

In the evolving landscape of digital media, deepfake videos have emerged as a potent tool for manipulation and deception. Since their inception, deepfakes have blurred the lines between reality and fiction, raising significant concerns about their potential to disrupt political discourse and sow discord among the public.

Over the years, several high-profile deepfake videos have captured the public’s attention, underscoring the urgent need for vigilance and countermeasures against this growing threat to truth and authenticity. To fight AI-driven misinformation spread through deepfakes, 20 leading tech companies made a joint commitment on Friday, February 16th, 2024, ahead of a significant year of elections affecting over 2 billion people in more than 50 countries.

As of now, the following companies have signed: Adobe, Amazon, Anthropic, Arm, ElevenLabs, Google, IBM, Inflection AI, LinkedIn, McAfee, Meta, Microsoft, Nota, OpenAI, Snap, Stability AI, TikTok, Trend Micro, Truepic, and X.

The participating companies agreed to eight steps as part of the accord, including assessing the risks of their models, working to detect and address deceptive content on their platforms, and disclosing those processes to the public. According to the release, each step applies only to the extent that it is relevant to the services a given company provides.

“Democracy rests on safe and secure elections. Google has been supporting election integrity for years, and today’s accord reflects an industry-side commitment against AI-generated election misinformation that erodes trust. We can’t let digital abuse threaten AI’s generational opportunity to improve our economies, create new jobs, and drive progress in health and science.”

– Kent Walker, President, Global Affairs at Google.

The signatories plan to target deepfakes, which can falsify audio, video, and images to make it seem that key actors in democratic elections are saying or doing things they are not, or to give voters false information about voting. The rise of AI-generated content has caused serious worries about election-related misinformation: the creation of deepfakes has increased by 900% year over year, according to data from Clarity, a machine learning firm.

The impact of deepfakes on global politics

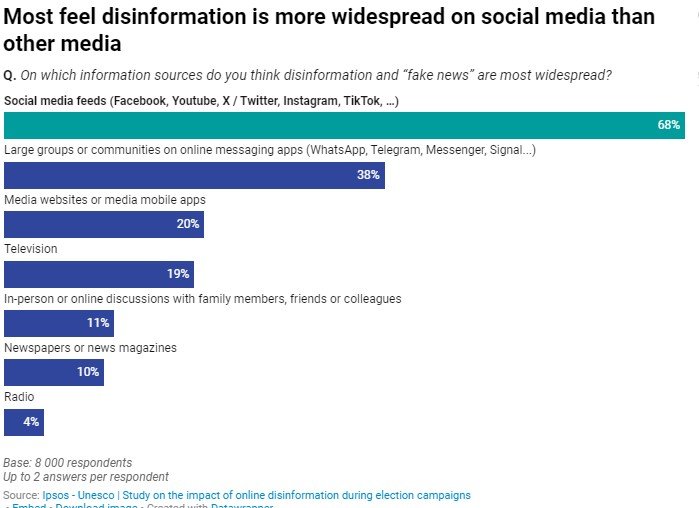

Disinformation during elections has posed a significant challenge since the 2016 US presidential race, when Russian entities exploited inexpensive and accessible methods to disseminate false information through social media platforms. The swift advancement of AI technology has increasingly alarmed policymakers, deepening their concerns about misinformation in contemporary elections.

In 2018, a watershed moment in the history of deepfakes occurred when a video featuring former US President Barack Obama surfaced on YouTube. In the video, Obama appeared to deliver a grave warning about the dangers posed by deepfakes, urging citizens to remain vigilant against their proliferation. However, the video was not a genuine address by the former president but a meticulously crafted fabrication by comedian Jordan Peele.

The following year, a video purportedly featuring Indian Prime Minister Narendra Modi circulated widely on social media platforms. In the video, Modi appeared to endorse a regional political party in West Bengal, sparking a political firestorm before its fraudulent nature was exposed. Although it was debunked as a hoax, the video had already reached millions of viewers, exacerbating tensions and fueling distrust within the political sphere.

In 2020, Belgian Prime Minister Sophie Wilmès became the unwitting subject of a viral prank on Facebook. In the fabricated video, Wilmès allegedly announced stringent lockdown measures in response to the COVID-19 pandemic, causing panic and outrage among unsuspecting viewers. Although the video was later revealed to be part of a prank orchestrated by a television show, its spread underscored the power of deepfake technology to amplify misinformation and incite public hysteria.

The proliferation of deepfake audio further compounded the challenges posed by digital manipulation, as evidenced by a damning audio clip featuring Iranian Foreign Minister Mohammad Javad Zarif in 2021. In the leaked recording, Zarif purportedly criticized Iran’s supreme leader and military, casting doubt on his loyalty and integrity in the run-up to the presidential election. Despite Zarif’s vehement denials and assertions of the clip’s falsity, its dissemination tarnished his reputation and exacerbated existing political tensions within Iran.

How tech giants are trying to fight deepfakes – and why it’s not enough

The tech giants acknowledge that the detection and watermarking technologies used for identifying deepfakes haven’t advanced quickly enough to keep up with the rapid improvement and proliferation of AI-generated content. For now, the companies are just agreeing on what amounts to a set of technical standards and detection mechanisms, which they hope will make it easier for users and platforms to spot and flag deepfakes.

“With so many major elections taking place this year, it’s vital we do what we can to prevent people being deceived by AI-generated content. This work is bigger than any one company and will require a huge effort across industry, government and civil society. Hopefully, this accord can serve as a meaningful step from industry in meeting that challenge.”

– Nick Clegg, President, Global Affairs at Meta

However, the companies also admit that they have a long way to go to combat the problem effectively, given its many layers and challenges. Even if the platforms behind AI-generated images and videos agree to bake in safeguards such as invisible watermarks and certain types of metadata, there are ways around those protective measures.

Simply taking a screenshot can sometimes be enough to fool a detector. Additionally, the invisible signals that some companies include in AI-generated images haven’t yet made it to many audio and video generators.
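To see why a label carried only in file metadata is fragile, consider the rough sketch below. It is a toy model, not any company's actual labeling scheme: the `ToyImage` class, the `ai_generated` field, and the detector are all hypothetical names made up for illustration. It assumes the label lives only in metadata and that a screenshot copies the visible pixels into a brand-new file.

```python
from dataclasses import dataclass, field


@dataclass
class ToyImage:
    """Hypothetical stand-in for an image file: pixel data plus file metadata."""
    pixels: list[int]
    metadata: dict[str, str] = field(default_factory=dict)


def tag_as_ai_generated(image: ToyImage) -> ToyImage:
    # Assumed labeling scheme: the generator writes a provenance field into the metadata.
    return ToyImage(list(image.pixels), {**image.metadata, "ai_generated": "true"})


def take_screenshot(image: ToyImage) -> ToyImage:
    # A screenshot re-captures what is on screen: same pixels, new file, no inherited metadata.
    return ToyImage(list(image.pixels))


def metadata_detector(image: ToyImage) -> bool:
    # Naive detector that trusts the metadata label and nothing else.
    return image.metadata.get("ai_generated") == "true"


generated = tag_as_ai_generated(ToyImage(pixels=[12, 200, 37, 255]))
screenshot = take_screenshot(generated)

print(metadata_detector(generated))           # True: the label is present
print(metadata_detector(screenshot))          # False: the label did not survive
print(screenshot.pixels == generated.pixels)  # True: the picture itself is unchanged
```

In this simplified model the screenshot looks identical to the original but carries no label, which is one reason watermarks embedded in the pixels themselves are being pursued alongside metadata.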

The announcement of the accord came a day after OpenAI, the research lab behind the popular text-generation model ChatGPT, unveiled Sora, its new model for AI-generated video. Sora works similarly to OpenAI’s image-generation AI tool, DALL-E. A user types out a desired scene and Sora will return a high-definition video clip. Sora can also generate video clips inspired by still images, and extend existing videos or fill in missing frames. The potential applications of Sora are vast, but so are the risks of misuse and abuse.

“We’re committed to protecting the integrity of elections by enforcing policies that prevent abuse and improving transparency around AI-generated content. We look forward to working with industry partners, civil society leaders and governments around the world to help safeguard elections from deceptive AI use.”

– Anna Makanju, Vice President of Global Affairs at OpenAI

In the face of the growing threat of AI-generated misinformation, the joint pledge by 20 tech companies is an essential step toward protecting the digital sphere from the harms of deepfakes. Countering deepfakes will require constant innovation, and despite the challenges ahead, the accord offers a spark of hope and signals a united stance against the digital manipulation that threatens the foundations of democratic discourse.