In keeping with the trend of fake videos surfacing almost daily during India's general election season, a new doctored video has gone viral. It shows India's Union Home Minister Amit Shah appearing to advocate the abolition of class and caste quotas. Following a complaint by the Home Ministry, a Congress supporter was arrested on Monday.

The deepfake clip shows Shah saying, “If the BJP comes to power, it will end the unconstitutional Scheduled Castes (SC), Scheduled Tribes (ST), and Other Backward Classes (OBC) reservation.” The BJP publicly condemned the video soon after it went viral.


Mounting an attack on the Congress, Shah said on Monday that the BJP backs reservations for SCs, STs, and OBCs. He blamed the Congress for spreading a fake video of him and said that the saffron party would be a ‘protector’ of reservation. “Congress is spreading misinformation that the BJP will end reservations after crossing 400 seats. These claims are baseless and unfounded,” he said at a press conference in Guwahati.

Prime Minister Narendra Modi also accused the opposition of trying to disrupt an electoral process that has so far been peaceful by circulating fake videos of the BJP's top leaders, including himself and Home Minister Amit Shah.

“They have created fake videos of me, Amitbhai, (JP) Naddaji, and some of our chief ministers. Using AI, they have put words in our mouth that we cannot even dream of uttering,” Modi said while campaigning in Maharashtra. His remarks came as an alleged Congress “war room coordinator” was arrested in Assam for sharing such a video of Shah.


In November 2023, a fake video circulated on social media that appeared to show Prime Minister Narendra Modi performing Garba, a festive folk dance from Gujarat that is popular across the country. Modi flagged the video as fake, saying, “I recently saw a video in which I was seen playing Garba. I have not done Garba since school.”

Other deepfake videos depict Bollywood actors Aamir Khan and Ranveer Singh appearing to criticize Prime Minister Narendra Modi's performance and urging viewers to ‘Vote for Justice, Vote for Congress’, promoting the opposition party. Both actors denied any involvement in these fabricated clips.

These incidents highlight the growing exploitation of AI to manipulate narratives and potentially sway public opinion during the elections.

The Indian fact-checking platform BOOM analyzed the Ranveer Singh video using Itisaar, a tool developed at IIT Jodhpur, and determined that it contained an AI-generated voice clone. “Considering disinformation is already a major issue facing the country, the rise of AI-enabled disinformation exacerbates an already problematic situation,” said Archis Chowdhury, a senior fact-checker at BOOM.

Prime Minister Narendra Modi has warned that deepfakes pose one of the biggest threats to India, urging people to be vigilant about the misuse of new AI technology to spread deceptive videos and images. “We must be careful with emerging technologies. When used responsibly, they can be beneficial. However, if misused, they can create immense problems. Be wary of deepfake videos generated with AI,” Modi said.

Human rights groups are increasingly warning that Indian voters in 2024 face one of the highest risks of electoral misinformation anywhere. Many people do not know how to tell genuine content from AI fakery, which leaves them vulnerable to deception and disenfranchisement. Social media platforms are struggling to contain fake news and propaganda, while the government updates laws to better tackle the surge of online deepfakes. Countering AI-enabled deception has become a pressing priority.

How Do Deepfakes Work?

Deepfakes are typically created by using facial mapping technology to build detailed datasets of a person's face. AI is then used to seamlessly swap one person's face onto another's, while voice-cloning technology generates a matching impersonated audio track.

Concerned that AI-generated deepfakes could spread misinformation, the Indian government last month issued advisories to social media platforms, reminding them of their legal obligation to promptly identify and remove such content.

Experts note India lacks specific laws addressing deepfakes and AI-related crimes, though provisions under the IT Act could provide civil and criminal recourse. Some argue that while deepfakes challenge legal systems globally, practical solutions exist.

Pranesh Prakash, a law and policy consultant, said that while a moral panic surrounds deepfakes, it is necessary to clearly identify the actual harms they pose and the gaps in existing laws. He questioned the IT minister's push for urgent new regulations without clarifying the precise problem or the legal provisions envisioned, DW reported.

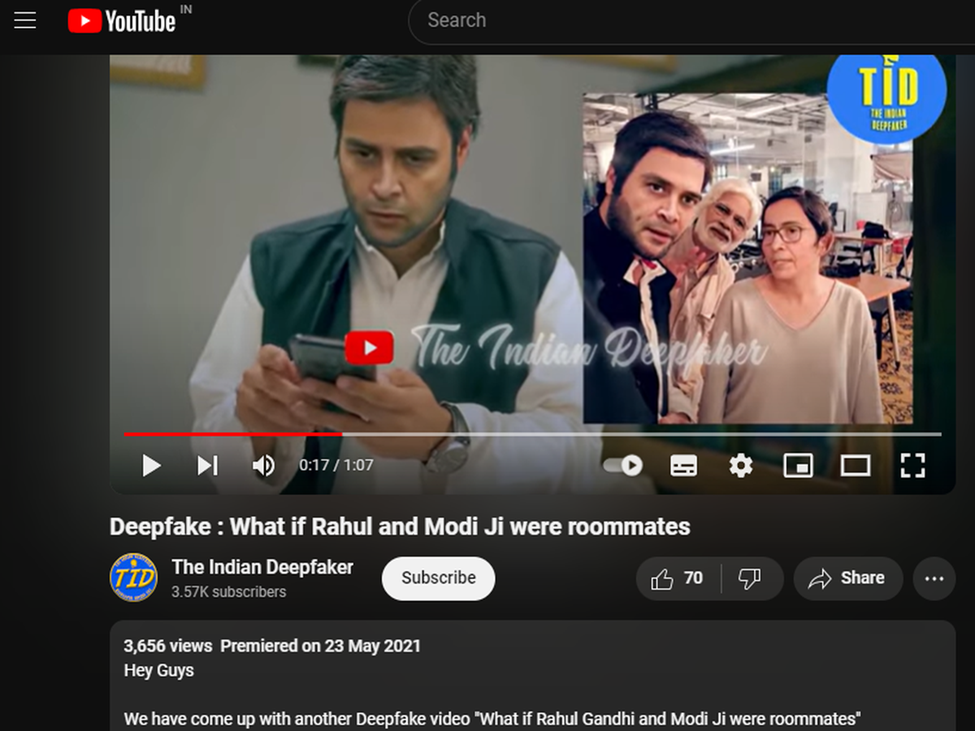

For Divyendra Singh Jadoun, founder of The Indian Deepfaker, a face-swap deepfake video once took 8-10 days using high-end graphics processing units (GPUs). Now, for someone with coding skills, it is a low-cost task that takes two to three minutes. He notes two types of political deepfake requests: enhancing a leader's image or defaming opponents. His company rejects unethical requests but creates personalized deepfake videos for party workers.

Although his company watermarks its content, Jadoun says platforms struggle to detect anything beyond low-quality deepfakes. He recalls his company's deepfakes slipping past Facebook and YouTube filters after upload, and an Instagram deepfake ad that was never flagged for violating the rules.

The key question remains whether social media giants will truly prioritize flagging deepfakes effectively across their platforms.

What Does the Law Say?

Deepfakes are already largely covered under India's IT Rules, which have a framework in place to report and handle morphed images. Here is what the IT Rules say about such media:

Rule 3(2) of the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021

(b) The intermediary shall, within twenty-four hours from the receipt of a complaint made by an individual or any person on his behalf under this sub-rule, in relation to any content which is prima facie in the nature of any material that exposes the private area of such individual, shows such individual in full or partial nudity or shows or depicts such individual in any sexual act or conduct, or is in the nature of impersonation in an electronic form, including artificially morphed images of such individual, take all reasonable and practicable measures to remove or disable access to such content which is hosted, stored, published or transmitted by it:

(c) The intermediary shall implement a mechanism for the receipt of complaints under clause (b) of this sub-rule which may enable the individual or person to provide details, as may be necessary, in relation to such content or communication link.

In essence, social media companies have 24 hours from the time they receive a complaint to take down a deepfake that impersonates the complainant, whether the content is sexual or political in nature. The complaint can come from the person depicted or from someone acting on their behalf, and can be filed through the platform's grievance officer or India's cybercrime reporting portal.

Big Tech Giants Hurry to Fortify Their Platforms

With India's general election under way and the 2024 U.S. presidential race approaching, Big Tech giants are preparing measures to protect access to truthful information and combat AI-generated misinformation such as deepfakes. However, some missteps have already occurred during India's ongoing polls.

Google, Meta, and OpenAI have pledged to cooperate with the Indian government and voters, but challenges remain. YouTube plans rules requiring creators to disclose synthetic content, though it is unclear whether they will be fully implemented during these elections.

Meta outlined plans to label AI-generated content and deploy fact-checkers against deepfake misinformation across its apps, but labeling will only begin closer to summer. A Meta spokesperson said the company's policies prohibit misleading, AI-manipulated media.

OpenAI recently previewed samples from Voice Engine, its text-to-speech model that can naturally clone a voice from a short audio clip, though the model has not been publicly released. Other voice cloning tools, however, are readily available, including through app stores.

While tech companies pledge cooperation, the practical realities of swiftly detecting and limiting increasingly sophisticated synthetic media during live elections across multiple platforms remain a significant challenge. Robust, standardized safeguards appear to still be in development as campaigning is underway.

A Need for Education

YouTube is filled with unlabeled deepfake videos depicting PM Modi singing Bollywood songs, dancing, or appearing in popular films. However, Jadoun doesn’t believe the creators of such non-watermarked deepfakes intend harm, stating “Memers play a major role in creating awareness about this technology. They’re helping people know Modi isn’t actually singing.”

A screenshot of a video by The Indian Deepfaker, showing a skit that imagines Rahul Gandhi and Narendra Modi as bickering roommates | Photo Credit: The Indian Deepfaker (YouTube)

He acknowledges that greater awareness of deepfakes is needed, especially among senior citizens and people in smaller cities. During elections, the risks of false content are amplified. The World Economic Forum's Global Risks Report 2024 ranked India highest globally for the risk of misinformation and disinformation, warning that it “could seriously destabilize newly elected governments’ legitimacy, risking unrest and eroding democratic processes.”

Unregulated AI use in electioneering erodes public trust in the process and can be used to manipulate opinions and micro-target communities through opaque algorithms. The Internet Freedom Foundation warned that “targeted misuse against gender minority candidates/journalists may deepen inequities.”

More evidence will emerge on the intricate links between synthetic media and voting patterns. But deceptive AI content has become ingrained in India's elections over the past five years, an old tactic in a new disguise. In this battle between the real and the fake, the genuine and the generated, India's democracy and the electorate's agency face a pivotal test.